Recruiter Match Score

AI-powered recruiting management tool to reduce recruiter screening time and improve candidate quality.

Impacts

↓ 81% screening time

↓ 50% screening effort

80% strong or good interviews

Role

Product Designer (1 of 2)

Owned candidate evaluation workflow

Team

PM, 8 Engineers, 1 Data Scientist

Timeline

9 months

01 — Context

The Problem

Recruiters were manually screening hundreds of resumes per role, resulting in long hiring cycles, burnout, and inconsistent candidate quality.

The process was labor-intensive and fragmented. Recruiters relied heavily on manual review and intuition, with little data to help them prioritize strong candidates early in the funnel.

Opportunity

- Reduce manual screening effort

- Surface high-quality candidates faster

- Build trust in AI-assisted decision making

Success Metrics

- Average time to screen a candidate

- Adoption and satisfaction among recruiters

- Interview-to-hire ratio

02 — Alignment

Aligning on Goals

Before jumping into features, I hosted and facilitated a working session to clarify recruiter pain points, explore possible directions, and help the team step back and define the problem we were actually solving.

Brainstorming & Clustering

I facilitated cross-functional brainstorming sessions with participants from product, go-to-market, and SuccessFactors. Together, we evaluated opportunities based on user value, business impact, and technical feasibility, narrowing a broad set of ideas into a focused set of problems worth solving first.

Prioritization

Rather than trying to solve everything at once, we aligned on a clear starting point: reduce the time recruiters spend manually screening candidates. Several ideas, including advanced analytics and a full ATS redesign, were intentionally deferred so we could ship a focused MVP and validate core assumptions quickly.

03 — Research

Insights That Shaped the Product

Together with another designer, I co-led research from early discovery through post-launch iteration. This work clarified where to focus, validated key decisions, and informed the roadmap after release.

Discovery Research

We conducted interviews with recruiters, candidates, and customers to understand where the recruiting process was breaking down and where automation could add value.

Recruiters described a labor-intensive screening process, limited visibility into candidate quality, and frequent context switching across tools. This reinforced our focus on reducing manual screening time and integrating seamlessly into existing workflows.

Concept Validation

With early design explorations in place, I ran usability sessions to test match scoring, explainability, and integration models.

Recruiters were open to automation but cautious about disruption. They needed clarity around how scores were generated and flexibility to override decisions. These findings helped narrow the feature set and avoid unnecessary complexity.

MVP Feedback

After launch, we gathered feedback from recruiters using the product in real workflows.

Usage patterns confirmed that integration and transparency were critical to adoption. Recruiters reported measurable time savings and expressed interest in deeper analytics and candidate rediscovery — signals that directly informed the next iteration.

04 — Design

Translating Strategy into Product

With direction and scope defined, the focus shifted to building a system recruiters would adopt. The final product evolved through four key design decisions.

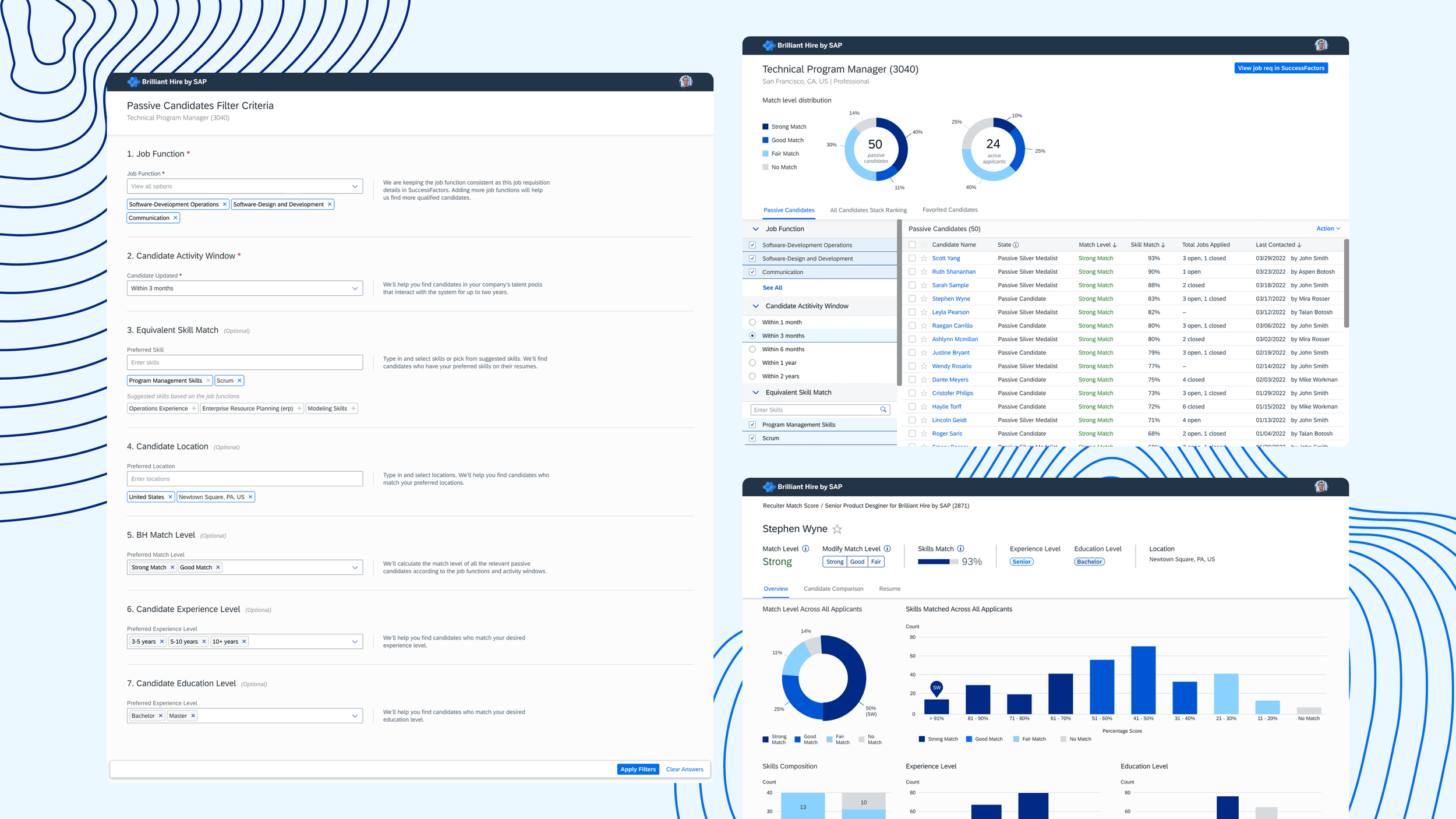

Seamless Integration

Adoption was the biggest risk. We initially explored a standalone experience that offered greater design flexibility, but usability testing revealed recruiters were reluctant to adopt a separate system. Although deeper integration limited some visual freedom, embedding match scores directly into SuccessFactors significantly reduced workflow disruption and improved adoption confidence.

Trust Through Transparency

Match scoring was central to the individual candidate experience, but a number alone wouldn’t build trust. Early testing revealed skepticism toward algorithmic prioritization. I introduced transparent breakdowns, override controls, and feedback loops to ensure recruiters understood the score and stayed in control. Trust came from clarity, not complexity.

Closing the Workflow Loop

The initial MVP surfaced insights but stopped short of enabling action. Within the individual candidate flow, I introduced favoriting and comparison tools that synced decisions back to SuccessFactors. This removed workflow breaks and supported structured discussions with hiring managers.

Proactive Candidate Discovery

Screening new applicants solved only part of the problem. Together with my design partner, I expanded the system to include ATS mining and a guided rediscovery flow, enabling recruiters to surface strong past candidates quickly. This shifted RMS from reactive screening to proactive talent discovery.

05 — Results

Measurable Impact

81%

Reduction in average time to screen a candidate (17 days → 3.2 days).

50%

Reduction in screening effort. Recruiters reviewed roughly half as many candidates to find the right hire.

80%

Of completed interviews were rated “strong” or “good”. Interview time was concentrated on higher-quality candidates.

Customers reported that match results aligned with their expectations and that the product was easy to integrate into their workflow. Feedback consistently pointed toward expanding data visibility and enabling proactive candidate discovery — insights that directly informed the next phase of the roadmap.

06 — Reflection

Key Takeaways

Having a clear product direction makes everyday decisions easier

This was my first experience contributing to product direction rather than designing from a fixed set of requirements. I saw how much easier design decisions became once the team agreed on what we were trying to achieve. Without that clarity, it's easy to spend time polishing features that don't actually move the product forward.

An AI tool only works if users trust it

I initially thought the success of an AI recruiting tool would depend mostly on the quality of the model. Through research and testing, I realized recruiters cared just as much about understanding the score and feeling in control of the outcome. Designing ways to explain results and allow overrides made a bigger difference than adding more complexity to the model.

Good design judgment means knowing when to stop researching and ship

Earlier in my career, I felt pressure to validate every decision before moving forward. This project taught me that there’s a point where more research stops being helpful. Shipping a focused MVP, gathering real feedback, and iterating based on actual use proved far more effective than waiting for perfect certainty.
