Recruiter Match Score

AI-powered recruiting management tool to reduce recruiter screening time and improve candidate quality.

Role

Lead Product Designer

Team

PM, 8 engineers, 1 data scientist

Timeline

9 months

01 — Context

The Problem

Recruiters were manually screening hundreds of resumes per role, leading to long hiring cycles, burnout, and inconsistent candidate quality.

Our challenge was to design a system that fit into recruiters' existing workflows and presented match results clearly, without adding to the cognitive load of an already demanding process.

Opportunity

Manual screening consumed much of recruiters' time. Automatically matching candidates to a role could let them focus on the most qualified applicants and shorten the hiring cycle.

Success Metrics

We defined three metrics to measure whether the product was working:

- Time spent per candidate

- Recruiter satisfaction

- Interview-to-hire ratio

02 — Alignment

Aligning on Goals

To avoid jumping into features too early, we ran a working session to map out recruiter pain points and explore possible directions. I hosted and facilitated the workshop.

Brainstorming & Clustering

After generating and clustering ideas, the next step was deciding what to focus on first. I worked closely with product, go-to-market, and stakeholders from SuccessFactors (the source of our candidate data) to prioritize opportunities by user value, business impact, and technical feasibility. This helped us narrow a broad set of ideas down to a small number of problems worth solving in the first release.

Prioritization

From there, we aligned on a clear starting point. Instead of trying to solve everything at once, we focused on reducing the time recruiters spent manually screening candidates. This became the foundation for the MVP and guided feature decisions moving forward.

03 — Research

Research & Validation

Research was conducted throughout the project to guide early direction, validate key ideas, and inform iteration after launch.

Discovery Research

Early interviews with recruiters, candidates, and customers helped us understand where the recruiting process was breaking down and where automation could add the most value.

This work shaped the initial product direction and helped us focus on reducing manual screening. Recruiters were frustrated with the current process and eager for a more automated solution.

Concept Validation

Once we had early design explorations, I ran user testing sessions with recruiters to validate key ideas such as match scoring, explainability, and workflow integration.

This helped us narrow the feature set and avoid investing in ideas that created friction or confusion. Recruiters wanted a system that was easy to use, easy to understand, and easy to integrate into their existing workflow.

MVP Feedback

After launch, we collected feedback from recruiters using the MVP to understand whether the product fit into their daily workflow and whether the results aligned with expectations.

This feedback directly informed iteration plans and future roadmap decisions. Recruiters were satisfied with the product and used it to reduce their manual screening time.

04 — Process

Design Evolution

The design evolved through three major iterations, each informed by user feedback and usability testing.

Initial Prototype

The first iteration focused on establishing core functionality. We prioritized getting the basic flow working before addressing visual refinements.

MVP

Based on initial user testing, we identified key pain points in the navigation flow and addressed them with a reorganized structure.

Production Release

The final release incorporated learnings from all previous iterations, resulting in a cohesive experience that met both user needs and business objectives.

05 — Solution

The Final Design

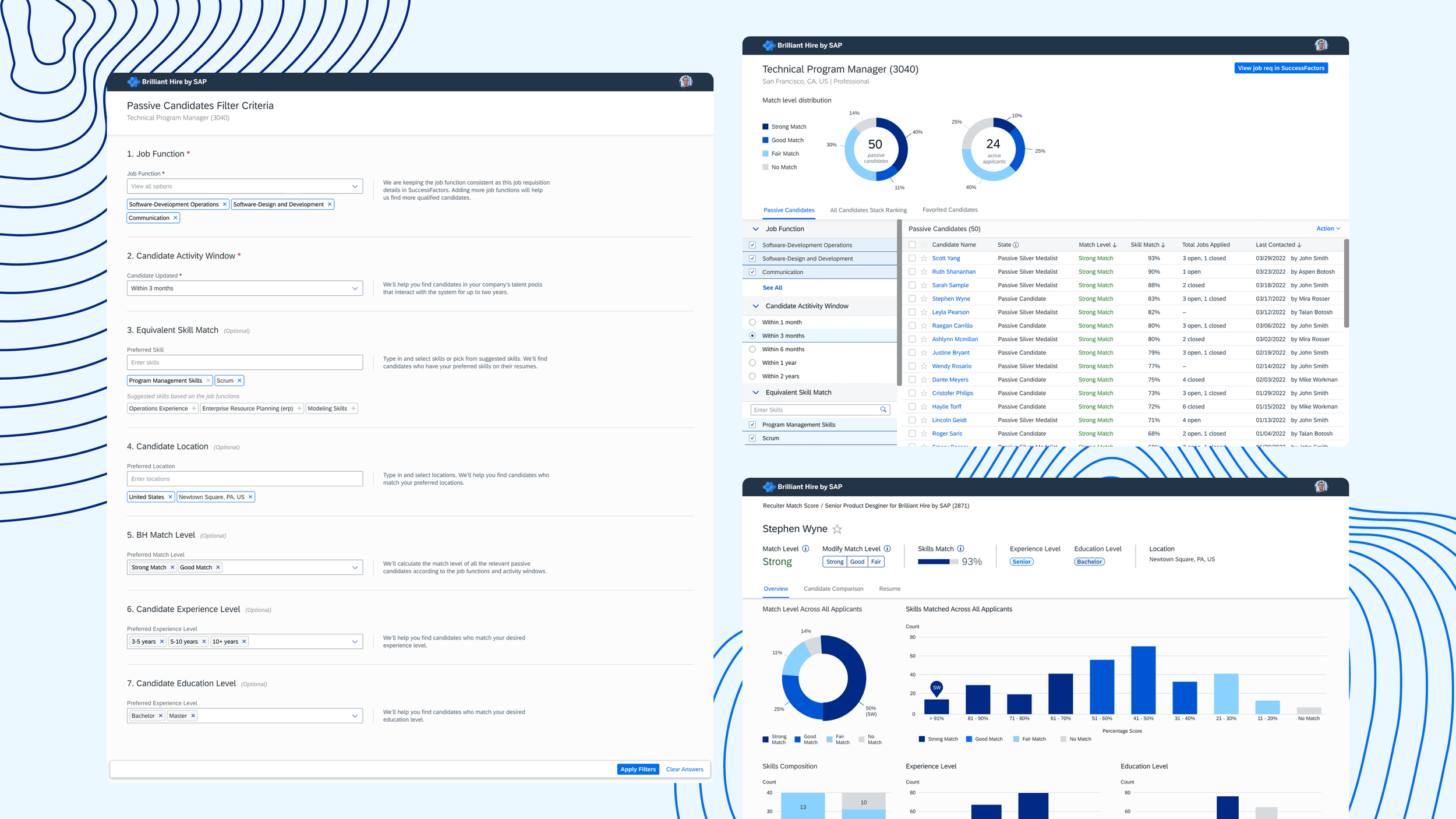

The final design brings candidate matching into recruiters' existing screening workflow, from reviewing match results to shortlisting and comparing top candidates.

Seamless Workflow

The MVP flow was knowingly missing a step, a trade-off we made as a team after weighing several constraints: once a recruiter found a candidate they liked, the flow ended and no further action could be taken. With this upgrade, recruiters can now “favorite” candidates in BH, and this information is passed back to SFSF (SuccessFactors) so they can continue with the next step there. Because recruiters are usually asked to present their top candidates to hiring managers at the end of the screening process, I also brought back the candidate comparison table, which had been well received in earlier user studies.

Rediscovering Past Candidates

To surface the past candidates recruiters are most interested in, I created a short questionnaire that quickly narrows down the options. It shows seven questions, but only two are required, which keeps the experience fast while still giving recruiters flexibility. On the recommendation page, all filters remain available in the left-hand panel, so recruiters can adjust their search at any time.

06 — Results

Measurable Impact

80%

Reduction in the average time spent screening a candidate (17 days before vs. 3.2 days after adopting RMS).

50%

Reduction in screening effort. Recruiters focus on the most qualified candidates and review only half as many candidates as before to find the right hire.

80%

of completed interviews were with “strong” or “good” candidates, so interview time goes to the most qualified applicants.

We have received positive feedback from customers who adopted RMS. They confirmed that our match results aligned with their expectations and that the product was easy to use. Customers generally asked us for more data, and there was a strong desire for candidate discovery, not only candidate analysis. Most of these requests are already on the product roadmap for upcoming iterations, which reassures us that we are heading in the right direction.

07 — Reflection

Key Takeaways

Having a clear product direction makes everyday decisions easier

This was my first time helping shape a product direction rather than designing from a fixed set of requirements. I saw how much easier design decisions became once the team agreed on what we were trying to achieve. Without that clarity, it is easy to spend time polishing features that do not move the product forward.

An AI tool only works if users trust it

I initially thought the success of an AI recruiting tool would depend on the quality of the model. Through research and testing, I realized recruiters cared just as much about understanding the score and feeling in control of the outcome. Designing ways to explain the results and let users override the system made a bigger difference than adding more complexity to the model.

Good design judgment means knowing when to stop researching and ship

Earlier in my career, I felt pressure to validate every design decision before moving forward. This project taught me that there is a point where more research stops being helpful. Learning to ship an MVP, gather real feedback, and iterate helped the team move faster and build something people actually used.
